Maia 200: The AI accelerator built for inference

Optimized AI systems

At the systems level, Maia 200 introduces a novel, two-tier scale-up network design built on standard Ethernet. A custom transport layer and tightly integrated NIC unlock performance, strong reliability and significant cost advantages without relying on proprietary fabrics.

Each accelerator exposes:

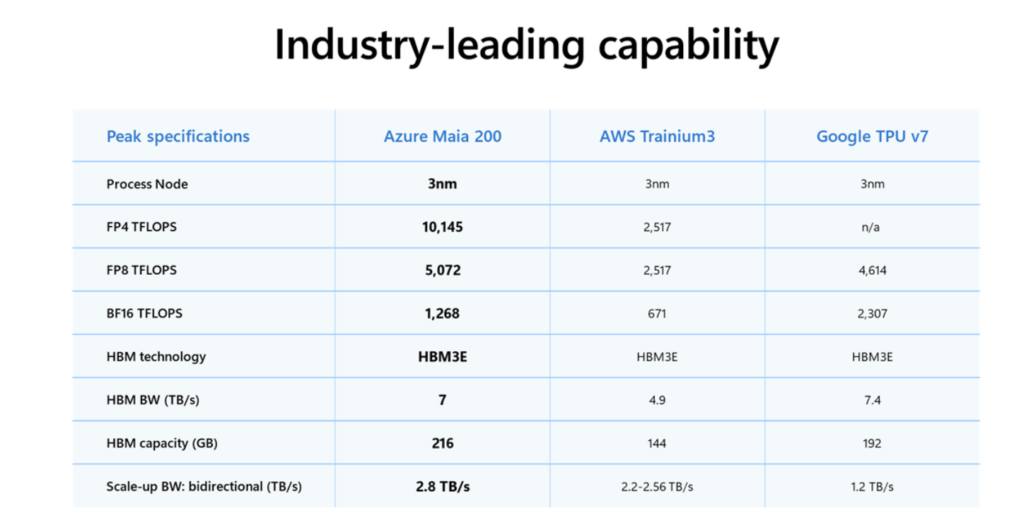

- 2.8 TB/s of bidirectional, dedicated scale-up bandwidth

- Predictable, high-performance collective operations across clusters of up to 6,144 accelerators
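To put the two figures above in perspective, here is a rough back-of-the-envelope sketch. Only the 2.8 TB/s and 6,144 numbers come from the text. The even send/receive split, the decimal terabyte (10^12 bytes), and the textbook ring all-reduce cost model are assumptions made purely for illustration; nothing here describes how Maia 200 actually schedules its collectives, and the function names are hypothetical.

```python
# Back-of-the-envelope figures derived from the two numbers quoted above.
# Everything except those two constants is an illustrative assumption.

SCALE_UP_TBPS_BIDIR = 2.8   # TB/s per accelerator, bidirectional (from the text)
MAX_CLUSTER_SIZE = 6_144    # accelerators per cluster (from the text)
BYTES_PER_TB = 1e12         # assumption: decimal terabytes


def per_direction_tbps(bidir_tbps: float) -> float:
    """Assume the bidirectional figure splits evenly between send and receive."""
    return bidir_tbps / 2


def aggregate_tbps(bidir_tbps: float, accelerators: int) -> float:
    """Sum of per-accelerator bandwidth; ignores topology and contention."""
    return bidir_tbps * accelerators


def ring_allreduce_seconds(message_bytes: float, accelerators: int,
                           send_tbps: float) -> float:
    """Bandwidth-only lower bound for a textbook ring all-reduce.

    Each participant sends 2 * (N - 1) / N times the message size.
    Latency, protocol overhead, and the two-tier topology are ignored.
    """
    n = accelerators
    bytes_sent = 2 * (n - 1) / n * message_bytes
    return bytes_sent / (send_tbps * BYTES_PER_TB)


if __name__ == "__main__":
    one_way = per_direction_tbps(SCALE_UP_TBPS_BIDIR)
    total = aggregate_tbps(SCALE_UP_TBPS_BIDIR, MAX_CLUSTER_SIZE)
    t = ring_allreduce_seconds(1e9, MAX_CLUSTER_SIZE, one_way)
    print(f"per-direction bandwidth: {one_way:.1f} TB/s")
    print(f"aggregate across a full cluster: {total:,.1f} TB/s")
    print(f"1 GB all-reduce, idealized lower bound: {t * 1e3:.3f} ms")
```

The result is best read as a floor rather than a prediction: real collectives add per-hop latency and transport overhead, and a two-tier network would likely use a hierarchical algorithm rather than a single flat ring.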

This architecture delivers scalable performance for dense inference clusters while reducing power usage and overall TCO across Azure’s global fleet.