DeepSeek mHC: Manifold-Constrained Hyper-Connections

Researchers have introduced a new neural network framework that improves large-scale AI training by restoring stability lost in recent architectural advances. The approach, called Manifold-Constrained Hyper-Connections (mHC), builds on Hyper-Connections while addressing their core weaknesses, delivering stronger performance, better scalability, and more efficient training. These factors matter more as foundation models continue to grow in size and complexity.

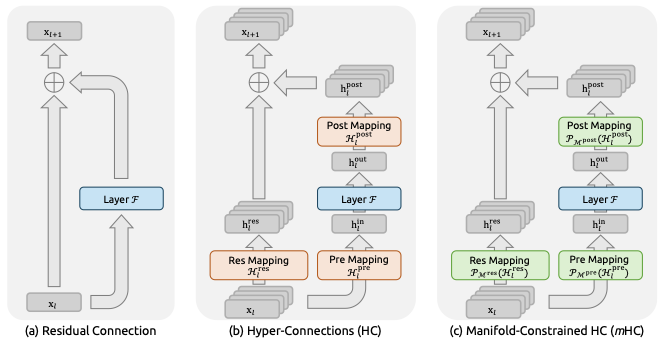

Recent research has expanded the standard residual-connection architecture through approaches like Hyper-Connections (HC), which widen the residual stream and introduce more complex connectivity patterns. While these changes improve performance, they also break identity mapping, the core property of residual networks that lets a layer's input pass through the skip path unchanged. Losing it leads to training instability, limited scalability, and higher memory overhead.

To address these issues, researchers introduced Manifold-Constrained Hyper-Connections (mHC), a new framework that restores identity mapping by projecting Hyper-Connections onto a constrained manifold. The approach also includes infrastructure-level optimizations to maintain efficiency.
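To make the idea of projecting onto a constrained manifold more concrete, here is a minimal Python sketch. It rests on assumptions that go beyond this summary: the constrained set is taken to be the doubly stochastic matrices (non-negative entries, every row and column summing to 1), and the projection is approximated with Sinkhorn-Knopp normalization. The function names (`sinkhorn_project`, `mix`, `propagate`, `l2_norm`) are hypothetical, and each residual stream is reduced to a single scalar to keep the toy small. It is an illustration, not the authors' implementation.

```python
import math
import random


def sinkhorn_project(logits, n_iters=50):
    """Map a square matrix of real logits to an approximately doubly
    stochastic matrix using Sinkhorn-Knopp normalization (an assumption
    made for this sketch)."""
    n = len(logits)
    # Exponentiate so every entry is strictly positive.
    m = [[math.exp(v) for v in row] for row in logits]
    for _ in range(n_iters):
        # Rescale each row to sum to 1.
        for i in range(n):
            row_sum = sum(m[i])
            m[i] = [v / row_sum for v in m[i]]
        # Rescale each column to sum to 1.
        for j in range(n):
            col_sum = sum(m[i][j] for i in range(n))
            for i in range(n):
                m[i][j] /= col_sum
    return m


def mix(matrix, streams):
    """Apply a mixing matrix to the residual streams (scalars in this toy)."""
    return [sum(w * s for w, s in zip(row, streams)) for row in matrix]


def propagate(streams, depth, constrained, seed=0):
    """Send the streams through `depth` randomly drawn mixing layers."""
    rng = random.Random(seed)
    n = len(streams)
    for _ in range(depth):
        logits = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
        # Constrained layers use the projected matrix; unconstrained
        # layers use the raw random matrix directly.
        matrix = sinkhorn_project(logits) if constrained else logits
        streams = mix(matrix, streams)
    return streams


def l2_norm(values):
    """Euclidean norm of a list of floats."""
    return math.sqrt(sum(v * v for v in values))


if __name__ == "__main__":
    x = [1.0, -2.0, 0.5, 3.0]
    print("input norm:        ", l2_norm(x))
    print("unconstrained norm:", l2_norm(propagate(x, 32, constrained=False)))
    print("constrained norm:  ", l2_norm(propagate(x, 32, constrained=True)))
```

In this toy setting the unconstrained stack typically changes the signal norm by many orders of magnitude over 32 layers. The constrained stack keeps the sum across streams fixed and does not amplify the norm, because a doubly stochastic matrix has spectral norm 1. That is one way to read "restoring identity mapping": the average signal passes through arbitrary depth without being scaled up or down.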

Experimental results show that mHC enables stable, large-scale training while delivering meaningful performance gains and improved scalability. Researchers expect mHC to serve as a practical extension of Hyper-Connections and to advance understanding of how network topology influences the design of next-generation foundation models.