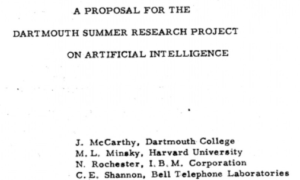

Let’s start with the fundamentals of AI. What better place to begin than the origin of the term “Artificial Intelligence”?

History Lesson

1956 – The Dartmouth Conference: The Dartmouth Summer Research Project on Artificial Intelligence was a seminal event for artificial intelligence as a field and is widely credited with coining the term “Artificial Intelligence”.

> Human: Define “artificial intelligence”

> HAL: Artificial Intelligence (AI) refers to the development of computer systems that can perform tasks that typically require human intelligence. It is a broad field of study that encompasses various subfields, such as machine learning, natural language processing, computer vision, and robotics.

While this milestone marked the beginning of “AI” as we know it, the concept has been around for much longer. In fact, Greek mythology described a kind of “intelligent automaton” in ancient times. They called it “Talos”: a bronze autonomous robot that circled the island of Crete three times a day to protect it from invaders. That’s also the backstory behind the name of Cisco Talos, Cisco’s threat intelligence group of modern-day cybersecurity threat hunters and protectors.

Now, let’s see how this amazing field of study has evolved over the last 50+ years.

AI Milestones

1958 – Perceptron: Frank Rosenblatt developed the perceptron, a type of neural network capable of learning and making decisions based on inputs. It was one of the earliest examples of machine learning.

1966 – ELIZA: Developed by Joseph Weizenbaum, ELIZA was a computer program that simulated conversation using simple pattern matching and substitution rules. It demonstrated the potential of natural language processing and human-computer interaction.

1981 – Expert Systems: Expert systems, rule-based systems designed to mimic human expertise in specific domains, moved into widespread commercial use in the early 1980s. MYCIN, developed at Stanford University in the 1970s, was a notable earlier example used for diagnosing bacterial infections.

1997 – Deep Blue: IBM’s Deep Blue chess-playing computer defeated world chess champion Garry Kasparov. It showcased the potential of AI in strategic decision-making and marked a significant achievement in game-playing search algorithms and specialized hardware.

2006 – Deep Learning: Geoff Hinton and his colleagues showed that deep neural networks could be trained effectively by pre-training them one layer at a time, reviving interest in deep learning. This paved the way for significant advancements in computer vision and speech recognition.

2011 – Watson: IBM’s Watson system competed and won against human champions on the quiz show “Jeopardy!” Watson demonstrated advancements in natural language processing, information retrieval, and machine learning.

2012 – ImageNet: At the 2012 ImageNet Large Scale Visual Recognition Challenge, a competition built on a large-scale dataset for object recognition, the convolutional neural network (CNN) AlexNet achieved groundbreaking accuracy. It marked a turning point for computer vision and image classification tasks.

2014 – Generative Adversarial Networks (GANs): Ian Goodfellow introduced GANs, a framework for training generative models. GANs have since been used for tasks like image synthesis, style transfer, and data augmentation.

2016 – AlphaGo: DeepMind’s AlphaGo defeated world champion Go player Lee Sedol. It showcased the power of reinforcement learning and marked a significant milestone in AI, as Go was considered a game requiring high-level human intuition.

2018 – Natural Language Processing: OpenAI’s GPT (Generative Pre-trained Transformer) model made significant strides in natural language processing tasks, such as text generation, language translation, and question-answering.

2020 – Microsoft T-NLG: Microsoft introduced its Turing Natural Language Generation (T-NLG) model, which was then the “largest language model ever published at 17 billion parameters.” Alongside it, Microsoft released DeepSpeed, an open-source deep learning optimization library.

2020 – GPT-3: OpenAI released GPT-3, a highly advanced language model capable of generating coherent and contextually relevant text. GPT-3 showcased the potential of large-scale language models and raised awareness about ethical considerations surrounding AI.

2022 – ChatGPT (GPT-3.5): OpenAI releases ChatGPT, built on GPT-3.5. It gains considerable praise for the breadth of its knowledge base, its deductive abilities, and the human-like fluidity of its natural language responses, but it also garners criticism for, among other things, its tendency to “hallucinate,” a phenomenon in which an AI responds with factually incorrect answers with high confidence. The release triggers widespread public discussion on artificial intelligence and its potential impact on society.

2023 – GPT-4: Unlike previous iterations, GPT-4 is multimodal, accepting image input as well as text. GPT-4 is integrated into ChatGPT as a paid subscriber service. OpenAI claims that in its own testing the model received a score of 1410 on the SAT (94th percentile), 163 on the LSAT (88th percentile), and 298 on the Uniform Bar Exam (90th percentile).

2023 – Google Bard: In response to ChatGPT, Google releases its chatbot, Google Bard, in a limited capacity in March 2023. Bard is based on the LaMDA family of large language models.

I’m going to paraphrase Blind Al from the Deadpool movies.

“Listen to the PAST, it’s both a history teacher and a fortune teller”.

So, that brings us to current affairs (at the time of writing, June 2023). However, before we talk about the future of AI, I’d like to take a slight detour.

Let’s discuss key fundamentals and the nomenclature of modern AI systems.

Nomenclature of Modern AI Systems:

AI can be classified into two categories:

Narrow AI (weak AI) systems are designed to perform specific tasks, such as facial recognition, voice assistants, or recommendation algorithms.

General AI (strong AI) refers to highly autonomous systems that possess the ability to understand, learn, and apply knowledge across a wide range of tasks, similar to human intelligence. General AI remains an area of ongoing research and development.

The AI from 2001: A Space Odyssey, HAL 9000 (Heuristically programmed ALgorithmic computer), is a fictional example of General AI.

If you play video games, you know there’s no shortage of DUMB non-player characters (NPCs). Some are so dumb they can only perform a single task (like walking or doing the same thing over and over). That’s an example of Narrow AI, and my youngest son loves it when I go into “dumb NPC” mode in a public setting. My wife, on the other hand, hates it.

AI Learning

Models: Remember my example of Talos, the Greek autonomous robot protector? Now imagine that robot had no brain and was just a shell. An AI model plays the role of that brain. We want the robot to circle the island three times every day and look for invaders. Once it detects invaders, it must engage and fight them off to protect the island. These actions are Talos’s instructions, or goals. Without the brain/model (a special kind of computer program that can learn and make decisions to accomplish its goals), Talos wouldn’t have the logic needed to carry out the automated tasks it was intended for. A model must be trained, and training an AI model is similar to teaching a human brain:

1) Show the model images of the pirate invaders and teach it that these are the invaders it is protecting the island against.

2) Keep feeding it more and more images so its accuracy at identifying actual pirates is high and the chance of misclassification is low.

3) Continue to teach the model just as we would teach a human brain by feeding it more information over time.

As training continues, accuracy and confidence increase and the risk of misclassification decreases. Once the model is trained, it becomes really good at the specific tasks linked to its goals and instructions, and with further training on new examples it can keep refining what it has learned.

Machine Learning (ML): Machine learning is a subfield of AI focused on developing algorithms and models that enable computers to learn from data and make predictions or decisions without being explicitly programmed.

It includes techniques such as supervised learning, unsupervised learning, and reinforcement learning.

- Supervised Learning: In supervised learning, models are trained on labeled data, where each input is associated with a corresponding target output. The model learns to map inputs to outputs by optimizing a loss function, aiming to minimize the discrepancy between predicted and true outputs.

- Unsupervised Learning: Unsupervised learning involves training models on unlabeled data. The goal is to discover underlying patterns, structures, or representations within the data. Common unsupervised learning techniques include clustering, dimensionality reduction, and generative modeling.

- Reinforcement Learning: Reinforcement learning focuses on training agents to make sequential decisions in an environment to maximize cumulative rewards. Agents learn through trial and error, receiving feedback in the form of rewards or penalties for their actions. Reinforcement learning often employs techniques such as value functions, policies, and exploration-exploitation trade-offs.
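Supervised learning’s “optimize a loss function” step can be sketched in a few lines of plain Python. This minimal example uses made-up data and fits a straight line y ≈ w·x + b to labeled pairs by gradient descent on the mean squared error (MSE). Real models have vastly more parameters, but the loop of predict, measure the loss, and nudge the parameters is the same basic idea.

```python
# Minimal supervised learning sketch: fit y ≈ w * x + b by gradient descent
# on mean squared error (MSE). The data points are made up for illustration.

def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Learn weight w and bias b that minimize MSE between predictions and labels."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the loss
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

def mse(xs, ys, w, b):
    """Average squared gap between predictions and true labels."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Labeled training data: each input x is paired with its target output y (here y = 2x + 1)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = train_linear_model(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, loss={mse(xs, ys, w, b):.6f}")
```

The learned values should land very close to w = 2 and b = 1, the rule hidden in the labels.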

Neural Networks (NN): Neural networks are computing systems inspired by the structure and function of the human brain. They consist of interconnected nodes (similar to neurons) organized in layers and are used to recognize patterns, make predictions, and perform other AI tasks.
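To make “interconnected nodes organized in layers” concrete, here is a minimal sketch of a forward pass through a tiny network in plain Python. The weights are hand-picked for illustration (nothing is learned here) so that the network computes XOR, a function a single-layer perceptron cannot represent but a network with one hidden layer can.

```python
# A tiny feedforward neural network: 2 inputs -> 2 hidden nodes (ReLU) -> 1 output.
# Weights are hand-picked (not learned) so the network computes XOR.

def relu(z):
    """Activation function: pass positive values through, clamp negatives to zero."""
    return max(0.0, z)

def layer(inputs, weights, biases, activation=None):
    """One fully connected layer: each node sums its weighted inputs plus a bias."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs

HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]  # both hidden nodes see both inputs
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]             # output node combines the two hidden nodes
OUTPUT_B = [0.0]

def xor_net(x1, x2):
    hidden = layer([x1, x2], HIDDEN_W, HIDDEN_B, relu)
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_net(a, b))
```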

Generative Adversarial Network (GAN): A type of machine learning framework that consists of two neural networks, namely a generator network and a discriminator network, that work in tandem to generate and evaluate data.

The generator and discriminator networks are trained together in a competitive process. As training progresses, the generator aims to improve its ability to generate realistic data that can fool the discriminator, while the discriminator strives to become more accurate in distinguishing between real and fake data.
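For reference, the original GAN formulation (Goodfellow et al., 2014) writes this competition as a two-player minimax game. Here D(x) is the discriminator’s estimated probability that x is real, G(z) is a sample the generator produces from random noise z, p_data is the real data distribution, and p_z is the noise distribution:

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to push V up by scoring real data near 1 and generated data near 0, while the generator tries to push V down by producing samples for which D(G(z)) approaches 1.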

Deep Learning (DL): Deep learning is a subset of machine learning that utilizes artificial neural networks with multiple layers to extract high-level representations from raw data. Deep learning has been particularly successful in computer vision, natural language processing, and speech recognition tasks.

Transfer Learning: Transfer learning is an approach in machine learning where knowledge acquired from one task or domain is utilized to improve performance on a different but related task or domain. It allows models to leverage pre-trained weights and knowledge, reducing the need for extensive training on new data.

Generative Models (aka Gen AI or Generative AI): Generative models are AI models that learn the underlying probability distribution of a given dataset to generate new samples that resemble the original data. They have been used for tasks such as image synthesis, text generation, and music composition.
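A generative model can be as small as a character-level bigram model. This minimal sketch (training text and function names are made up for illustration) estimates the probability of each next character given the current one, then samples from that learned distribution to produce new text that resembles the original. Modern generative AI uses neural networks instead of a lookup table, but the “learn a distribution, then sample from it” idea is the same.

```python
import random
from collections import Counter, defaultdict

def fit_bigram_model(text):
    """Estimate P(next_char | current_char) from character-pair counts in the text."""
    counts = defaultdict(Counter)
    for current, nxt in zip(text, text[1:]):
        counts[current][nxt] += 1
    model = {}
    for current, nxt_counts in counts.items():
        total = sum(nxt_counts.values())
        model[current] = {ch: c / total for ch, c in nxt_counts.items()}
    return model

def sample(model, start, length, rng):
    """Generate new text by repeatedly sampling the next character from the learned distribution."""
    out = [start]
    for _ in range(length - 1):
        dist = model.get(out[-1])
        if not dist:  # no observed successor for this character: stop early
            break
        chars, probs = zip(*dist.items())
        out.append(rng.choices(chars, weights=probs, k=1)[0])
    return "".join(out)

training_text = "the cat sat on the mat and the rat sat on the hat"
model = fit_bigram_model(training_text)
print(sample(model, "t", 30, random.Random(0)))  # seeded for repeatable output
```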

Large Language Models (LLMs): Advanced AI systems designed to understand and generate human-like text. They are trained on vast amounts of text data and use complex algorithms to process and manipulate language.

These models are called “large” because they have millions or even billions of parameters: numeric values learned during training that act like little dials controlling how the model behaves. Generally, the more parameters a model has, the more complex the patterns it can capture and the more capable it can become.
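To make “parameters” concrete, the sketch below counts them for a small fully connected network, where each layer has one weight per input-to-output connection plus one bias per output node. The layer sizes are a hypothetical example; transformer-based LLMs are built from different layer types, but the idea of tallying every learned weight is the same.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a stack of fully connected layers.

    A layer mapping n_in inputs to n_out outputs has n_in * n_out weights
    plus n_out biases.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total

# Hypothetical example: 784 inputs (a 28x28 image) -> 512 hidden nodes -> 10 outputs
print(count_dense_parameters([784, 512, 10]))  # 407050
```

Even this toy network has over 400,000 parameters, which helps put “billions” in perspective.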

Multimodality: Refers to the ability of a model to process and understand multiple types of input data, such as text, images, audio, and video, at the same time or interchangeably, much like a human can see and talk at the same time.

Foundational Models (often called foundation models): These models aren’t just trained for one task, but can be adapted to many.

Think of them as Swiss Army knives of AI, trained broadly and adaptable for many downstream tasks with minimal additional training.

Natural Language Processing (NLP): Natural language processing focuses on enabling computers to understand, interpret, and generate human language. It involves tasks such as language translation, sentiment analysis, named entity recognition, and chatbots.

Computer Vision (CV): Computer vision involves the development of algorithms and systems that enable computers to understand and interpret visual data, such as images and videos. It encompasses tasks like object detection, image recognition, and image segmentation.

Finally, how can we adjust a model after it’s been trained? There are two common ways to accomplish this.

1) Fine-tuning adjusts a model’s internal weights so it specializes in a specific task. This fundamentally changes the model’s behavior.

2) Prompting provides clear instructions within the input to guide the model’s behavior without retraining it. Think of this as taking a model and giving it clear directions on a specific persona you want it to act as. This method changes the input, but not the model itself. ChatGPT “GPTs” are a prime example of prompting the model.
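A minimal sketch of the prompting approach, assuming a chat-style input made of role/content messages (a layout many chat APIs use, though exact formats vary by provider). The function name and persona text are hypothetical, and no model is called here: the point is that the persona lives entirely in the input, while the model’s weights stay untouched.

```python
def build_persona_prompt(persona, user_question):
    """Wrap a user's question with persona instructions (hypothetical helper).

    Only the *input* changes here; the model itself is not retrained.
    """
    return [
        {"role": "system", "content": f"You are {persona}. Stay in character and answer concisely."},
        {"role": "user", "content": user_question},
    ]

messages = build_persona_prompt(
    "HAL 9000, a calm and precise onboard computer",
    "Define artificial intelligence.",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Fine-tuning, by contrast, would change the model’s weights so that the persona no longer needs to be spelled out in every input.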

To summarize this “Learning” section:

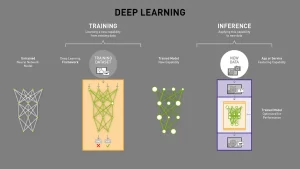

During the training phase, an AI model is exposed to a large dataset (often of labeled examples, as in supervised learning), allowing it to learn patterns, relationships, and representations within the data. The model adjusts its internal parameters to minimize errors and optimize its performance on the training data.

Transformers Changed EVERYTHING!

TLDR: Transformers allow AI systems to understand context at scale by processing information in parallel, which is why modern AI suddenly became faster, smarter, and far more capable.


Up until the late 2010s, most language models, such as recurrent neural networks (RNNs), processed information one step at a time. They would read text sequentially, word by word, much like a human reading a sentence left to right. This worked, but it was slow, inefficient, and limited in how much context the model could truly understand at once.

Transformers changed that.

Instead of processing information sequentially, transformer-based models use a mechanism called self-attention to look at every part of the input at the same time. They can evaluate relationships between words, sentences, or even images simultaneously, allowing the model to understand context, nuance, and meaning far more effectively. This shift made it possible for AI systems to reason over long documents, hold coherent conversations, and generate responses that feel surprisingly human.
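Here is a minimal sketch of the core idea, scaled dot-product self-attention, in plain Python: each output is softmax(Q·Kᵀ / √d)·V, a weighted average of every position’s value vector. It is a deliberate simplification: the toy token vectors are made up, and the learned query/key/value projections, multiple attention heads, and the rest of a real transformer are omitted (queries, keys, and values are all just the raw token vectors here).

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: every position attends to every position.

    queries, keys, values: lists of vectors (one per token), all of dimension d.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of all value vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Three toy "token" vectors; in a real transformer Q, K and V come from learned projections
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

The loop here runs one query at a time, but no query depends on another’s result. That independence is what lets GPUs compute all of them at once as a single matrix multiplication.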

This architectural breakthrough unlocked something equally important: SCALE!

Transformers are highly parallelizable, which means they can efficiently run on modern GPUs and accelerators. As a result, researchers could train models on massive datasets using billions (and now trillions) of parameters. This is why today’s AI systems didn’t just get better, they got dramatically better.

Most Large Language Models (LLMs), multimodal systems, and foundational models rely on transformer architectures at their core. Whether an AI system is writing code, analyzing images, generating video, or carrying on a conversation, there’s a very good chance a transformer is doing the heavy lifting behind the scenes.

In short, transformers didn’t just improve AI, they redefined what was possible. They are the backbone of modern AI systems in 2025 and beyond, and they’re the reason today’s models feel less like tools and more like collaborators.

AI Inference

Once the model is trained, it can be deployed for inference. In this phase, the model takes new, unseen input data and applies the learned patterns and rules to generate predictions or outputs. The input data can be in various formats, such as images, text, audio, or numerical values, depending on the specific task the model is designed for.

The inference process involves feeding the input data into the AI model, which then processes the data through its complex algorithms and neural network layers. The model produces an output or prediction based on its learned knowledge and the patterns it recognizes in the input data.

Inference is a crucial step in applying AI models to real-world problems, as it allows the models to generalize their learned knowledge to new, unseen data and provide useful predictions or outputs for decision-making or further actions.

If training is like teaching a student, inference is like testing them with new questions.
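The teach-then-test split can be sketched with the perceptron from the 1958 milestone. In this minimal example (the data points are hypothetical), training uses the classic perceptron rule to adjust the weights whenever a prediction is wrong; inference then applies the frozen weights to inputs the model has never seen, with no further learning.

```python
def train_perceptron(samples, epochs=20, lr=0.1):
    """Training phase: adjust weights whenever the prediction is wrong (perceptron rule)."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), label in samples:
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            error = label - pred  # 0 when correct, +1 or -1 when wrong
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b

def infer(w, b, x1, x2):
    """Inference phase: apply the learned weights to a new input. No learning happens here."""
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0

# Hypothetical labeled training data: label 1 when the point lies above the line x2 = x1
training = [((0.0, 1.0), 1), ((1.0, 2.0), 1), ((2.0, 3.5), 1),
            ((1.0, 0.0), 0), ((2.0, 1.0), 0), ((3.0, 1.5), 0)]
w, b = train_perceptron(training)

# New, unseen inputs (the "new questions" on the test)
print(infer(w, b, 0.5, 2.5))  # point above the line -> 1
print(infer(w, b, 3.0, 0.5))  # point below the line -> 0
```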

AI Prompts

> Human: define an AI prompt

> HAL: An AI prompt, also known as an instruction or query, refers to the input given to an AI system to generate a specific response or perform a desired task. It serves as a starting point or guidance for the AI model to understand the user’s intent and generate a relevant output.

Prompt engineering: Crafting well-formed and precise instructions is an important aspect of working with AI models to achieve desired results. By providing clear and explicit prompts, users can effectively communicate their intentions and guide the AI model’s behavior.

Here’s a great infographic (provided by Nvidia) on AI and the key elements we discussed.

Human Intelligence (HI) vs Artificial Intelligence (AI)

Artificial General Intelligence (AGI): AGI refers to highly autonomous AI systems that possess general intelligence similar to human intelligence. While AGI is still a hypothetical concept, its potential impact, if realized, could be profound for the human race.

Now, let’s not get ahead of ourselves just yet. You see, we humans still have several advantages over AI (for now).

- Creativity, innovation, and abstract thinking

- Emotional intelligence

- Common sense and contextual understanding

- Ethics and moral reasoning

- Adaptability

- Intuition and insight

- Brain to body connection, dexterity and sensory perception

- Energy efficiency of the human brain vs complex modern AI systems

That last bullet point is very interesting indeed. You see, the human brain is estimated to consume about 20W of power on average. By comparison, a single Nvidia A100 GPU consumes 250-400W, depending on the variant. By some industry estimates, ChatGPT needed around 30,000 of these, so even with the more power-efficient PCIe variant (250W TDP), we are looking at 7.5MW of power. That’s just for the GPUs! We still have servers, HVAC, networking and more to power.
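The arithmetic behind that comparison, as a quick back-of-the-envelope check. It uses only the figures quoted in the paragraph above and counts GPU power alone, ignoring servers, cooling, and networking.

```python
# Back-of-the-envelope check of the power figures above (GPU power only).
BRAIN_WATTS = 20          # estimated average power draw of a human brain
A100_PCIE_WATTS = 250     # TDP of the A100 PCIe variant used in the estimate
GPU_COUNT = 30_000        # the estimated ChatGPT GPU count cited above

gpu_watts = GPU_COUNT * A100_PCIE_WATTS
print(f"GPU power: {gpu_watts / 1e6} MW")                       # GPU power: 7.5 MW
print(f"Equivalent human brains: {gpu_watts // BRAIN_WATTS:,}")  # Equivalent human brains: 375,000
```

In other words, the GPUs alone draw as much power as roughly 375,000 human brains.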

I’d like to leave you with one closing thought on HAL’s AI fundamentals primer.

If sci-fi becomes reality and AGI moves beyond the realm of theory, we might be able to ask HAL for the answer to “the ultimate question of life, the universe and everything.” Do you think we are going to get this response, or something more intriguing?

> HAL: The answer to the ultimate question of life, the universe and everything is… 42!